What is the Difference Between Cloud Storage and Cloud Computing

Opening Scene: Why these two terms keep showing up in boardrooms and Slack channels

Think of the cloud as a city skyline: storage is the skyscraper where your files live, and computing is the power plant and team of workers that open, remix, analyze, and serve those files to the rest of the city. People use “cloud” as shorthand for both and then mix the two up, which is understandable because the two services are usually bought and used together. But they play very different roles. One keeps your data safe and accessible; the other gives you the horsepower to do something meaningful with that data.

Functionality

Cloud storage is, at its heart, a place to put information you don’t want on your laptop or office server. Upload a spreadsheet, a photo library, a database backup—storage’s job is to keep it, index it, and hand it back when you ask. It’s optimized for availability, redundancy, and simple access patterns: read, write, sync.

Cloud computing, by contrast, is about action. It supplies virtual CPUs, memory, containers, managed databases, and platform services so software can run without you buying physical servers. Where storage says “I’ll keep your stuff,” computing says “I’ll run your app, transform your data, or serve your website.” In practice they’re complementary: stored data fuels compute jobs, and compute operations produce new data to be stored.
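To make the division of labor concrete, here is a minimal in-memory sketch. The `ObjectStore` class and `word_count_job` function are hypothetical names invented for this illustration and do not correspond to any provider's API. The store only keeps and returns bytes, while the job reads stored data, processes it, and writes a new object back.

```python
class ObjectStore:
    """Toy stand-in for cloud storage: it only keeps bytes and hands them back."""

    def __init__(self):
        self._objects = {}

    def put(self, key, data):
        self._objects[key] = data

    def get(self, key):
        return self._objects[key]


def word_count_job(store, input_key, output_key):
    """Toy stand-in for a compute job: read stored data, process it, store the result."""
    text = store.get(input_key).decode("utf-8")
    count = len(text.split())
    store.put(output_key, str(count).encode("utf-8"))
    return count


# Hypothetical keys and contents, for illustration only.
store = ObjectStore()
store.put("notes.txt", b"stored data fuels compute jobs")
result = word_count_job(store, "notes.txt", "notes.wordcount")
print(result, store.get("notes.wordcount"))
```

The store never inspects what it holds, and the job holds nothing once it finishes; that separation is the whole point of the two terms.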

Pricing Models

Comparing storage and compute pricing is like comparing a parking fee to a taxi meter. Cloud storage is mostly straightforward: you pay for the bytes you store and sometimes for retrievals or transfers. It’s predictable for most persistence needs, and the bill grows roughly in step with your archive.

Cloud computing pricing is more dynamic and can surprise you. Costs depend on CPU hours, instance types, memory sizes, network egress, managed services, and how efficiently your code runs. Short bursts of heavy processing or poorly optimized applications can spike bills quickly, so understanding usage patterns is critical if you want to avoid sticker shock.
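A rough way to see the difference is to put both bills into arithmetic. The sketch below is a simplification: every rate is a made-up number for illustration, not any provider's price list, and real bills include more line items than the ones modeled here.

```python
def monthly_storage_cost(stored_gb, price_per_gb_month,
                         egress_gb=0.0, price_per_egress_gb=0.0):
    """Storage bill: data kept for the month plus data transferred out."""
    return stored_gb * price_per_gb_month + egress_gb * price_per_egress_gb


def monthly_compute_cost(instance_count, hours_per_instance, price_per_instance_hour):
    """Compute bill: number of instances times the hours each one runs."""
    return instance_count * hours_per_instance * price_per_instance_hour


# Hypothetical rates and volumes, chosen only to make the arithmetic easy to follow.
storage = monthly_storage_cost(stored_gb=1000, price_per_gb_month=0.02,
                               egress_gb=100, price_per_egress_gb=0.10)
steady = monthly_compute_cost(instance_count=2, hours_per_instance=720,
                              price_per_instance_hour=0.10)
burst = monthly_compute_cost(instance_count=20, hours_per_instance=48,
                             price_per_instance_hour=0.10)

print(f"storage: {storage:.2f}")
print(f"steady compute: {steady:.2f}")
print(f"two-day burst: {burst:.2f}")
```

Under these assumed numbers, a two-day burst on twenty instances costs about two thirds of a full month on two instances, which is how short spikes end up dominating a compute bill.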

Performance and Speed

Storage performance is judged by latency (how fast you can fetch a file) and throughput (how much data you can move in a given time). Cold archives are cheaper but slower; hot storage is faster and costlier. If your app needs immediate access to terabytes of data, storage design becomes a performance story.

Compute performance is judged by processing speed, concurrency, and how resources are allocated. The cloud can spin up many instances in seconds to minutes to handle spikes, but network latency, instance type, and architecture affect real-world speed. In cinematic terms: storage supplies the props, compute choreographs the scene, and both need to be timed perfectly for a good show.
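The interplay of latency and throughput on the storage side can be approximated with a simple model: the time to fetch an object is roughly the wait before the first byte plus the size divided by the transfer rate. The function name and all figures below are assumptions for illustration, not measurements of any real storage tier.

```python
def fetch_time_seconds(size_mb, latency_ms, throughput_mb_per_s):
    """Approximate fetch time: time to first byte plus transfer time."""
    if throughput_mb_per_s <= 0:
        raise ValueError("throughput must be positive")
    return latency_ms / 1000.0 + size_mb / throughput_mb_per_s


# Hypothetical tiers: the "hot" tier answers quickly, the "cold" tier makes you wait first.
hot = fetch_time_seconds(size_mb=1000, latency_ms=20, throughput_mb_per_s=100)
cold = fetch_time_seconds(size_mb=1000, latency_ms=60_000, throughput_mb_per_s=50)

print(f"hot tier: {hot:.2f} s")
print(f"cold tier: {cold:.2f} s")
```

For small objects the latency term dominates, and for large ones the throughput term does, so the right tier depends on which kind of access your application makes most often.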

Management and Maintenance

With cloud storage, maintenance looks like lifecycle policies, versioning, backups, and replication across regions. The provider keeps the disks and data centers healthy; you set the rules about retention, deletion, and access.
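A lifecycle policy is essentially a set of age-based rules. The sketch below shows the idea in plain Python rather than any provider's policy format; the tier names (`hot`, `warm`, `cold`) and the day thresholds are hypothetical defaults chosen for the example.

```python
from datetime import date


def choose_tier(last_accessed, today,
                warm_after_days=30, cold_after_days=180, delete_after_days=None):
    """Pick a storage tier (or deletion) from the days since last access.

    All thresholds are illustrative assumptions, not provider defaults.
    """
    age_days = (today - last_accessed).days
    if delete_after_days is not None and age_days >= delete_after_days:
        return "delete"
    if age_days >= cold_after_days:
        return "cold"
    if age_days >= warm_after_days:
        return "warm"
    return "hot"


today = date(2024, 3, 1)
print(choose_tier(date(2024, 2, 25), today))   # accessed a few days ago
print(choose_tier(date(2024, 1, 1), today))    # accessed two months ago
print(choose_tier(date(2023, 1, 1), today))    # accessed over a year ago
```

In a real deployment you would express rules like these in the provider's own lifecycle configuration and let the service apply them, rather than running the logic yourself.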

Cloud computing shifts maintenance to orchestration: updating images, patching runtimes, managing autoscaling, and monitoring health checks. Providers take care of the underlying infrastructure, but software updates, performance tuning, and fault handling usually remain your team’s responsibility unless you choose a managed service that takes them on.

Security and Compliance

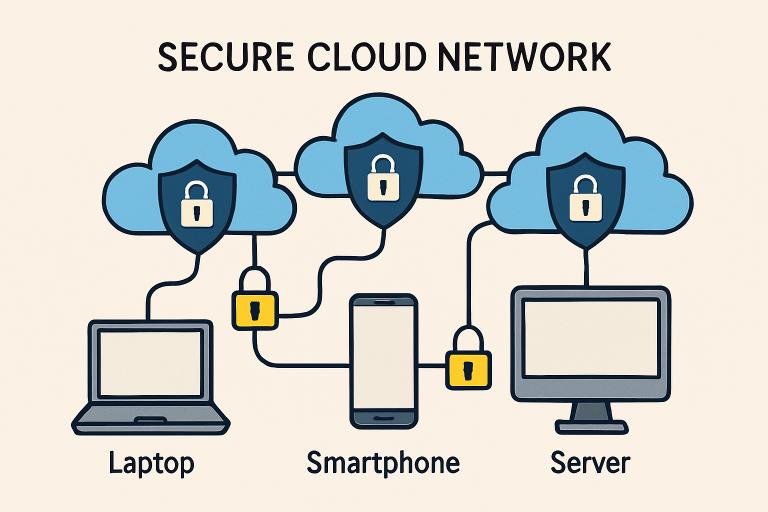

Both storage and compute require security guardrails, but their risks look different. Storage-centric security focuses on encryption at rest and in transit, access controls, and proper segregation so only authorized users or services can read or write data. Misconfigured buckets or lax policies are a common failure point.

Compute introduces runtime risk: executing code, processing third-party libraries, and interacting with external services. That raises concerns about runtime isolation, secure credential handling, and vulnerability management. Compliance needs—HIPAA, GDPR, PCI, and the like—will shape how you configure both storage and compute, from where data is hosted to how logs are retained.

Scalability and Flexibility

Scalability is where the cloud shines for both pieces of the puzzle. Storage scales almost transparently: add more data, and the provider absorbs the hardware complexity. Flexible tiers let you trade cost for access speed.

Compute scales differently: you can scale vertically (bigger machines) or horizontally (more machines). Cloud platforms make horizontal scaling easier with containers and managed services, so you can handle sudden traffic spikes or heavy batch processing without buying physical servers. The flexibility to mix instance types, serverless functions, and managed services gives architects a rich palette—but it also multiplies configuration choices.
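Horizontal scaling usually comes down to one calculation: how many machines of a given capacity are needed for the current load, kept within a floor and a ceiling. The sketch below is a simplified version of that rule; the function name, the per-instance capacity, and the limits are assumptions for illustration, and real autoscalers add smoothing and cooldown periods on top.

```python
import math


def desired_instances(requests_per_second, capacity_per_instance,
                      min_instances=1, max_instances=10):
    """Instances needed for the load, clamped to the configured bounds."""
    if capacity_per_instance <= 0:
        raise ValueError("capacity_per_instance must be positive")
    needed = math.ceil(requests_per_second / capacity_per_instance)
    return max(min_instances, min(max_instances, needed))


# Hypothetical load figures: each instance is assumed to handle 100 requests per second.
for load in (0, 450, 5000):
    print(load, desired_instances(load, capacity_per_instance=100))
```

The upper bound matters as much as the formula: it is what stops a traffic spike, or a bug that looks like one, from scaling the bill along with the fleet.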

Everyday Tradeoffs: Picking the right balance (without a sales pitch)

In practical terms, storage is the cost-efficient, persistent backbone for files and archives; compute is the elastic engine that turns those files into value—reports, models, web pages, or user experiences. Businesses often pay a little more attention to compute because it’s the active cost and the place where performance issues surface first, but storage choices affect costs, compliance, and downstream processing speed in ways that matter just as much.

FAQ

Are cloud storage and cloud computing the same thing?

No—storage is where data lives; computing is what you do with it. They work together but solve different problems.

Do I need both to run cloud applications?

Usually yes; even serverless apps rely on storage for logs, user files, or databases, while compute handles processing, APIs, and business logic.

Which one is cheaper to start with?

Storage typically has a lower entry cost per GB, while compute costs depend on usage patterns and can scale up quickly with heavy processing.

Can I move my data or compute between providers easily?

Data and workloads are portable in principle, but practical migration requires planning for formats, networking, and service differences.

Is the cloud more secure than on-premises servers?

Security depends on configuration and practices; cloud providers offer robust tools, but misconfiguration is a common source of risk on either side.

Will cloud computing always be faster than local servers?

Not always; network latency and instance selection matter, so for certain low-latency or specialized workloads, local infrastructure can still win.