What Really Keeps Your Apps Safe (It’s More Than Just Passwords)

The Hidden Threats Inside Your Code

Behind every screen tap and button press there’s a sprawling landscape of code—and tucked in there are mistakes that look tiny but behave catastrophically. These aren’t flashy problems you can spot with a glance; they’re logic stumbles, unchecked inputs, and assumptions the original devs made at 3 a.m. when the coffee ran out. Think SQL injection, cross-site scripting, and broken authentication: names that sound technical but really mean “someone found a clever way to turn your app against itself.”
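To make "unchecked input" concrete, here is a minimal sketch of SQL injection using Python's built-in `sqlite3` module. The table, the user names, and the two lookup functions are hypothetical and exist only for illustration; the point is the difference between pasting input into the query text and passing it as a parameter.

```python
import sqlite3

def make_db():
    # Hypothetical in-memory table used only for this illustration.
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (name TEXT, secret TEXT)")
    conn.executemany(
        "INSERT INTO users VALUES (?, ?)",
        [("alice", "a-secret"), ("bob", "b-secret")],
    )
    return conn

def find_user_unsafe(conn, name):
    # Vulnerable: user input is concatenated into the SQL text.
    query = "SELECT name, secret FROM users WHERE name = '" + name + "'"
    return conn.execute(query).fetchall()

def find_user_safe(conn, name):
    # Parameterized: the input is bound as data and never parsed as SQL.
    return conn.execute(
        "SELECT name, secret FROM users WHERE name = ?", (name,)
    ).fetchall()

conn = make_db()
payload = "nobody' OR '1'='1"               # classic always-true condition
leaked = find_user_unsafe(conn, payload)    # matches every row
blocked = find_user_safe(conn, payload)     # matches nothing
print(len(leaked), len(blocked))
```

The unsafe lookup returns every row because the payload rewrites the `WHERE` clause, while the parameterized version treats the same string as an ordinary (nonexistent) user name.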

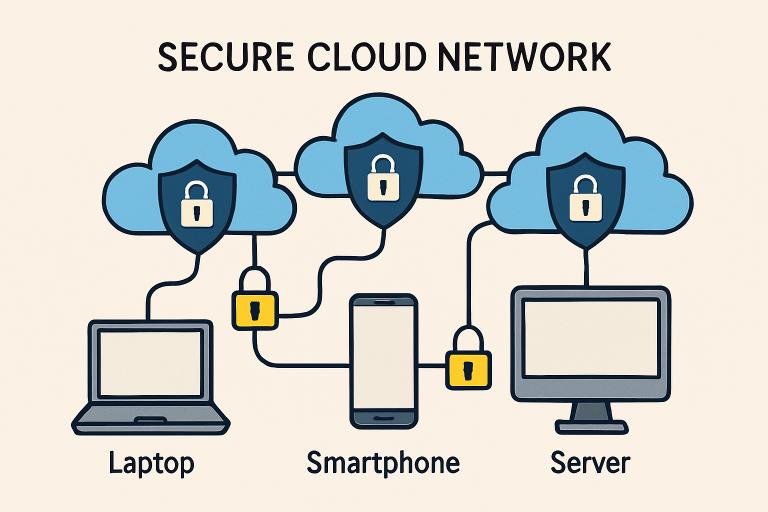

Why Passwords Aren’t Enough

Passwords are the visible lock on the front door, but most intruders don’t try that door first. They look for an open window: a forgotten API, an unvalidated input field, an error message that spills secrets. A strong password is necessary, yes, but it won’t stop an attacker who can inject commands through a form or exploit a session flaw. Security that starts and ends with passwords is like painting the doorknob and calling the house secure.

What DAST Does (and Why It Matters)

Dynamic Application Security Testing, or DAST, walks a running app the way an adversary would. It’s a hands-on, watch-the-app-breathe kind of test: sending inputs, following links, poking buttons, and trying to break behavior while the software is live. DAST finds the kinds of flaws that only appear when code interacts with data, networks, and real user flows, which are issues that static scans often miss. Best part? It doesn’t need the source code, so it’s well suited to testing third-party systems or opaque legacy apps.

The Mechanics of a DAST Scan

DAST tools spin up scenarios and watch responses—how a login page reacts to weird characters, whether a file upload strips dangerous metadata, how session cookies behave after a logout. The tool reports back with traces: the request that tripped the issue, the response that revealed it, and sometimes a reproduction path a developer can follow. It’s less about naming and shaming and more about handing the team a clear map to the weak stone in the wall.
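The probe-and-observe loop described above can be sketched in a few lines. This is a simplified illustration, not how any particular DAST product works: the two page functions are hypothetical stand-ins for a running app (a real scanner would send HTTP requests), and the payload list is a tiny assumed sample.

```python
import html
from dataclasses import dataclass

@dataclass
class Finding:
    check: str      # which probe tripped
    request: str    # the input that was sent
    response: str   # what the app sent back

def vulnerable_search_page(query: str) -> str:
    # Hypothetical app behavior: echoes input without encoding it.
    return f"<h1>Results for {query}</h1>"

def hardened_search_page(query: str) -> str:
    # Same page, but the input is HTML-escaped before rendering.
    return f"<h1>Results for {html.escape(query)}</h1>"

# Assumed sample payloads; real tools ship far larger sets.
PROBES = {
    "reflected-xss": "<script>alert(1)</script>",
    "html-injection": "<img src=x onerror=alert(1)>",
}

def scan(app) -> list:
    findings = []
    for check, payload in PROBES.items():
        response = app(payload)
        # A payload that comes back unchanged was not encoded by the app.
        if payload in response:
            findings.append(Finding(check, payload, response))
    return findings

vulnerable_findings = scan(vulnerable_search_page)
hardened_findings = scan(hardened_search_page)
print(len(vulnerable_findings), len(hardened_findings))
```

Each `Finding` carries the request and response pair, which is the trace a developer would use to reproduce the issue.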

How DAST Fits into DevSecOps

DevSecOps shifts security from an afterthought to a rhythm in the development lifecycle. Instead of shoehorning checks in at the end, teams weave testing into builds, deploys, and sprint routines. DAST is a natural fit here: run it against staging, as a stage in CI/CD pipelines, or after a hotfix lands. It can catch runtime problems that unit tests and code linters miss, and its findings can be triaged just like functional bugs, which means faster fixes and fewer surprises in production.
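One way a pipeline might act on scan results is a severity gate that fails the build when serious findings appear. The sketch below assumes a hypothetical report format (a list of dicts with `id`, `severity`, and `title`); real scanners export their own schemas, so the field names here are placeholders.

```python
SEVERITY_RANK = {"info": 0, "low": 1, "medium": 2, "high": 3, "critical": 4}

def gate(findings, fail_at="high"):
    """Return (exit_code, blocking_findings) for a list of finding dicts."""
    threshold = SEVERITY_RANK[fail_at]
    blocking = [
        f for f in findings if SEVERITY_RANK[f["severity"]] >= threshold
    ]
    # A nonzero exit code is what makes a CI step fail.
    return (1 if blocking else 0), blocking

# Hypothetical sample report, for illustration only.
report = [
    {"id": "F-1", "severity": "low", "title": "Missing security header"},
    {"id": "F-2", "severity": "high", "title": "Reflected XSS on search"},
]

exit_code, blocking = gate(report)
print(exit_code, [f["id"] for f in blocking])
```

In a real pipeline the script would end with `sys.exit(exit_code)`. Keeping the threshold configurable lets a team start lenient and tighten the gate as the backlog shrinks.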

Tools That Work Alongside DAST

DAST is powerful, but it’s one instrument in an orchestra. SAST (Static Application Security Testing) parses source code for risky patterns before the app runs; it’s great for catching issues early. IAST (Interactive Application Security Testing) lives inside the running app and blends code-awareness with runtime observation. SCA (Software Composition Analysis) scans the open-source parts, meaning libraries and packages, for known vulnerabilities. Together, they cover the code you write, the code you import, and the way the app behaves at runtime, forming a layered defense that actually makes sense.

A Real-World Example: Banking App Nightmares (and Wins)

Imagine a mobile banking app built with attention to UX and bragging-rights encryption. Users log in with biometrics and a complex password—but a subtle validation bug in the “forgot password” flow allows a specific input to escalate privileges. Suddenly, the front-door access is irrelevant because the attacker used a logic flaw to walk past it. A DAST scan would mimic those user flows and flag the dangerous path in test environments, giving the devs a chance to patch the logic before customers notice anything odd.
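The article does not say what the bug looks like, so the following is only one hypothetical shape it could take: a reset handler that copies every submitted field onto the account record. All names (`USERS`, the two handlers, `probe_reset`) are invented for this sketch.

```python
# Hypothetical in-memory account store.
USERS = {"alice": {"password": "old", "role": "customer"}}

def reset_password_unsafe(username, form):
    # Flawed: every submitted field is written to the account,
    # including fields this flow was never meant to change.
    USERS[username].update(form)

def reset_password_safe(username, form):
    # Only the password field is accepted; anything else is ignored.
    USERS[username]["password"] = form["password"]

def probe_reset(handler):
    """Submit an extra 'role' field and report whether it took effect."""
    USERS["alice"] = {"password": "old", "role": "customer"}  # fresh state
    handler("alice", {"password": "new", "role": "admin"})
    return USERS["alice"]["role"] == "admin"

unsafe_escalates = probe_reset(reset_password_unsafe)
safe_escalates = probe_reset(reset_password_safe)
print(unsafe_escalates, safe_escalates)
```

A scanner exercising the reset flow with unexpected fields would see the role change on the flawed handler and nothing on the fixed one, which is the kind of signal that turns a logic flaw into a ticket.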

The Human Side: Why Teams Need Processes, Not Silver Bullets

Tools catch things, but processes keep them from coming back. Security hygiene—regular scans, dependency updates, threat modeling, and clear incident playbooks—translates tool output into safer releases. Developers need readable reports, triage practices, and time to fix issues; security teams need realistic testing windows and environments that reflect production. When everyone speaks the same language—risk, exploitability, and impact—security becomes operationally achievable, not just aspirational.

Why This Matters More Than Ever

Apps run our lives now—banking, socializing, shopping, working. That concentration of personal and financial data is an irresistible target. Attackers are patient, clever, and relentless; one missed edge case can ripple into millions of affected users. Tools like DAST are the practical, real-world probes that reveal those edge cases, turning invisible bugs into actionable tickets. Put simply: in a world where apps breathe data, testing them while they’re alive is no longer optional.

FAQ

What is DAST in one sentence?

DAST (Dynamic Application Security Testing) is a method for testing an application while it’s running by simulating attacks to discover vulnerabilities that only appear at runtime.

How is DAST different from SAST?

SAST analyzes source code statically before execution, while DAST interacts with the live application to find issues that only appear during operation.

Do I need source code access to run DAST?

No—DAST works against the running app and does not require access to source code, which makes it useful for third-party and legacy systems.

Where in the pipeline should I run DAST?

Run DAST against staging and pre-production environments and as a recurring step in CI/CD; scanning after each release or significant change helps catch regressions early.

Can DAST find every security issue?

No—DAST uncovers many runtime flaws but should be used alongside SAST, IAST, and SCA for broader coverage.

Will DAST slow down my app?

It can. Scans generate heavy traffic and may submit forms or modify data, so they’re typically run against test or staging environments rather than production.

How do teams act on DAST findings?

A clear triage workflow, risk scoring, and reproducible test cases allow developers to prioritize and fix issues efficiently.
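As a rough illustration of risk scoring, the sketch below ranks findings by exploitability times impact. The 1-to-5 scales, the multiplication, and the sample issues are all assumptions made for this example; many teams use an established scheme such as CVSS instead.

```python
from dataclasses import dataclass

@dataclass
class Issue:
    title: str
    exploitability: int  # assumed scale: 1 (hard) to 5 (trivial)
    impact: int          # assumed scale: 1 (minor) to 5 (severe)
    repro: str           # steps or request a developer can replay

    @property
    def risk(self) -> int:
        return self.exploitability * self.impact

def triage(issues):
    # Highest risk first, so the riskiest issue lands at the top of the queue.
    return sorted(issues, key=lambda i: i.risk, reverse=True)

# Hypothetical findings, for illustration only.
queue = triage([
    Issue("Verbose error page", 2, 2, "GET /debug"),
    Issue("SQL injection on login", 5, 5, "POST /login with quote in name"),
    Issue("Session valid after logout", 3, 4, "Reuse cookie after /logout"),
])

for issue in queue:
    print(issue.risk, issue.title)
```

Keeping the reproduction steps on each record means the ticket a developer receives already says how to trigger the problem.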

Is DAST suitable for APIs and microservices?

Yes—DAST can test APIs and service endpoints by exercising their inputs and monitoring responses, though specialized configurations may be needed for complex service meshes.